Faster Than “One‑Click”: Why Programmable Supply Chains Win on Speed

In supply chain, we talk a lot about speed. Speed of replenishment. Speed of response. Speed of recovery when something goes wrong. Yet when it comes to the systems meant to support these decisions, the conversation about speed quickly collapses to a single metric: how many weeks does it take to “go live” after signing a software contract?

Most large supply chain software vendors have a very clear answer to that question. They promise rapid time‑to‑value through preconfigured content, standard data models, and “industry best practices.” If you look at the marketing for integrated business planning suites and advanced planning systems, the narrative is remarkably consistent: start from their reference model, apply a collection of templates, and you can be in production in “a few weeks” or “as few as 12 weeks,” sometimes explicitly “from Excel to advanced planning in weeks.”

Recently, I tried to step back from this narrative in my book Introduction to Supply Chain, where I frame supply chain as the business of making profitable decisions under uncertainty, day after day. If we take that viewpoint seriously, the question “how fast can we deploy software?” becomes “how fast can we put high‑quality, automated decisions into production—and keep them aligned with the business as it changes?” That is a very different question.

From there, I arrive at a conclusion that goes against the mainstream: for serious supply chain initiatives, a programmable approach is not only more powerful than traditional packaged software; it is actually faster to get meaningful results into production.

Let me explain why.

The comforting promise of preconfigured supply chain software

Traditional enterprise planning vendors offer a comforting story.

They start from a canonical data model for supply chain: locations, products, hierarchies, bills of material, calendars, lead times, constraints. On top of this model, they ship preconfigured processes (“best practices”), sample datasets, template dashboards, alerts, standard algorithms, and configuration guides. All this material is explicitly designed to accelerate implementation and reduce project risk.

The logic is perfectly coherent. If every customer’s data and processes can be described as a modest variation on a shared template, then most of the work should be reusable. The implementation project becomes a matter of mapping ERP fields into the planning model, turning on the relevant features, tuning parameters, and teaching people how to use the screens.

In that world, customization is a necessary evil. It is accepted for “special” cases, but it is also recognized as the main source of delays, budget overruns, and long‑term complexity. Hence the push for “configuration, not customization” (and, more recently, for low‑code and no‑code tooling): the goal is to stay within the safe envelope of what the software already knows how to do.

Under these assumptions, the idea that packaged software is faster to deploy is perfectly reasonable.

The difficulty is that real supply chains rarely fit those assumptions.

Where projects really slow down: semantics, not screens

When you look closely at where time is actually spent in large supply chain system deployments, it is not on installing the software or even on training users. It is on understanding what the data means.

Most sizable companies have one or more ERPs, plus a scattering of other systems—CRM, WMS, TMS, PIM, e‑commerce, and so on. Together, they hold thousands of tables. Officially, these entities have documented meanings; in practice, their real semantics are the result of years of workarounds, local conventions, compromises, and partial cleanups. The true behavior of the system is encoded in how people use it, not in how the original vendor intended it to be used.

When a planning suite arrives, it brings its own data model and its own expectations. Even when it comes from the same vendor as the ERP, the semantics do not line up neatly. A field called “location type” or “safety stock” does not mean the same thing in configuration, in daily operations, in reporting, and in the planning engine.

Someone has to reconcile all this.

That someone is usually a mixed team of IT, consultants, and business stakeholders. They must decide which tables are authoritative, which fields are trustworthy, how to handle exceptions, and how to map the company’s messy reality into the clean structures expected by the planning software. They write extraction and transformation jobs; they add custom flags and attributes; they invent conventions to encode constraints the standard model cannot express.

This is the point where the supposedly “one‑click” deployment often turns into a sprawling integration effort. The software itself may be live on day one, but the data it needs—the precise, trustworthy, daily data that a serious optimization engine requires—takes months or years to truly stabilize.

This is not an implementation failure. It is a symptom of a deeper fact: semantics are local, and there is no such thing as a universal, ready‑to‑use representation of a given company’s supply chain.

Supply chain as software, not configuration

If we accept that semantics are local, and that they keep evolving as the business changes, then the usual distinction between “configuration” and “customization” becomes misleading.

Under the surface, an ambitious supply chain initiative is always a software exercise. It is the act of specifying, in a precise and executable form, how the company wishes to make decisions about purchasing, production, inventory, pricing, allocation, and so on, given the available data and constraints.

In the traditional packaged model, this software is made of configuration tables, parameters, workflow diagrams, custom connectors, and occasional “user exits” written in a general‑purpose language. The hope is that most of the logic can be expressed in configuration, and only the fringes require code.

In my experience, especially in complex environments, the opposite happens. The most critical and differentiating logic ends up being implemented in brittle ways: by overloading fields, by layering Excel sheets and Python notebooks next to the official system, by teaching planners to interpret dashboards in a specific way that is not actually encoded anywhere.

The net result is that the “system” is partly in the software and partly in people’s heads.

At Lokad, we decided more than a decade ago to embrace the software nature of the problem instead of fighting it. We built our own domain‑specific language, Envision, aimed at supply chain practitioners rather than professional software engineers. The idea is simple: represent all the data transformations, all the forecasts, all the constraints, and all the decisions as scripts that can be read, versioned, tested, and modified in a controlled way.

From the outside, this looks like a programming environment. Internally, it is our answer to the semantic problem: instead of forcing complex reality into an off‑the‑shelf model, we give ourselves a flexible language to describe that reality as it actually is.

This brings us back to speed.

What does it mean to be “fast” in supply chain?

If speed is defined as “time until the software vendor can declare a go‑live according to their checklist,” then preconfigured suites will often look faster. The project plan is optimized for that milestone. The canonical model is designed to be populated quickly. The training material and best practices are aligned with that specific goal.

However, if speed is defined as “time until we have a live, automated decision flow that we trust with real money,” the picture changes.

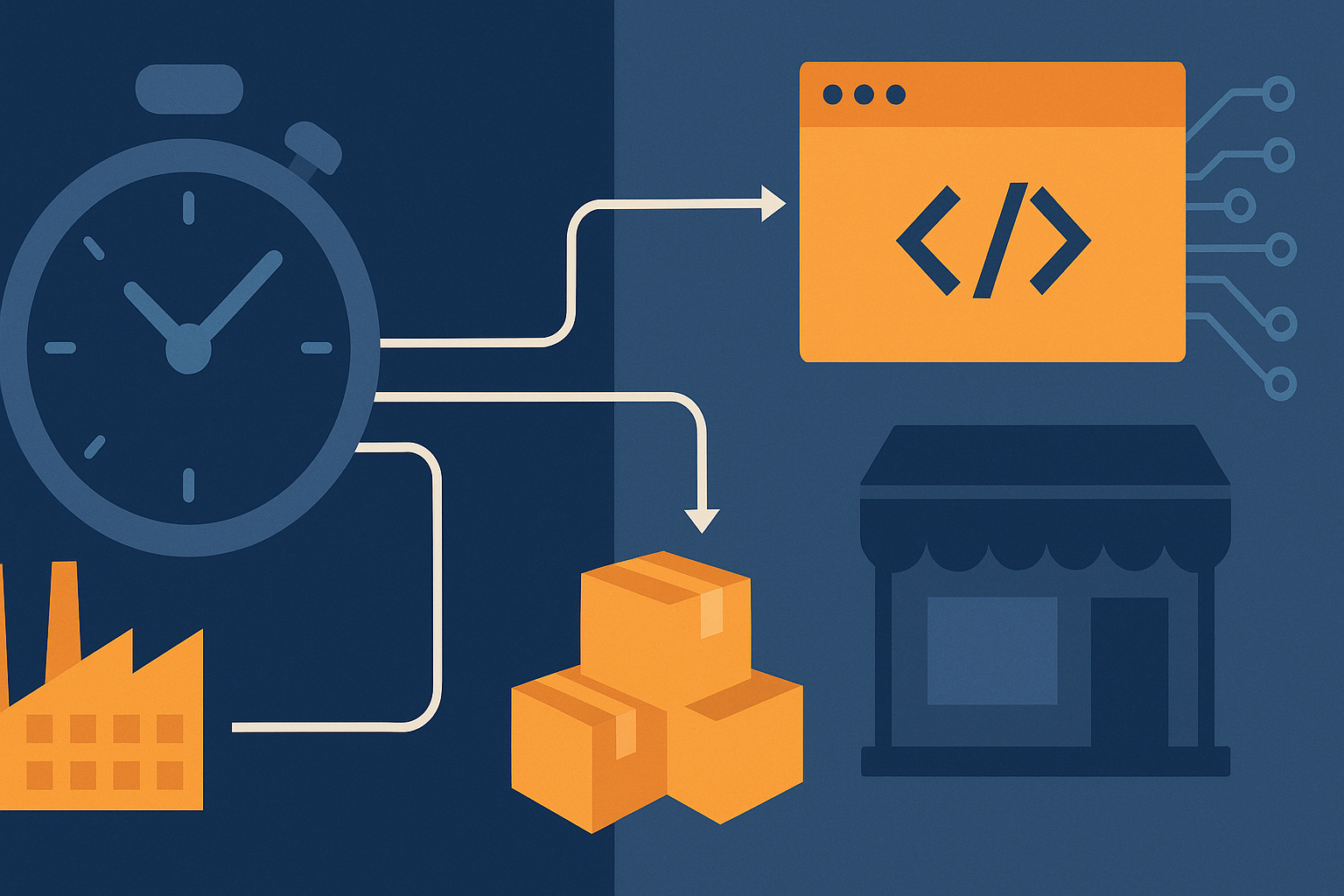

In a programmable approach, the project sequence typically looks like this:

First, set up reliable daily extractions from the existing systems, without trying to force them into a new canonical model. This is still hard work, but it is mostly about plumbing: get the raw data out, as it is, in all its ugliness.
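As a minimal illustration of this first step, assuming the extracts arrive as CSV text, the Python sketch below keeps every column exactly as exported and only adds the extraction date. It does not rename columns, convert types, or drop empty fields. The table name and the column names (`STOCK_LEVELS`, `ITEM_CD`, `LOC`, `QTY_OH`, `FLAG_X`) are hypothetical. This is not a description of how Lokad or any particular system performs extractions.

```python
import csv
import io
from datetime import date


def snapshot_raw_table(csv_text: str, table: str, extracted_on: date) -> dict:
    """Capture a raw extract as-is: no renaming, no type conversion, no dropped columns."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = [dict(row) for row in reader]  # every value stays a string, exactly as exported
    return {
        "table": table,                            # source table name, kept verbatim
        "extracted_on": extracted_on.isoformat(),  # one snapshot per daily run
        "columns": list(reader.fieldnames or []),  # original column names, original order
        "rows": rows,
    }


# Hypothetical daily extract with cryptic, undocumented column names.
raw = "ITEM_CD,LOC,QTY_OH,FLAG_X\nA-100,FR01,12,\nA-200,FR01,-3,Z\n"
snap = snapshot_raw_table(raw, "STOCK_LEVELS", date(2024, 1, 15))
print(snap["columns"])  # ['ITEM_CD', 'LOC', 'QTY_OH', 'FLAG_X']
print(snap["rows"][1])  # {'ITEM_CD': 'A-200', 'LOC': 'FR01', 'QTY_OH': '-3', 'FLAG_X': 'Z'}
```

Deciding what a negative `QTY_OH` or a `FLAG_X` value actually means is deliberately deferred to the later, programmable steps. The extraction itself stays dumb and faithful.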

Then, in the programmable environment, express the business logic step by step: cleaning and reconciling the data; expressing constraints and priorities; modeling uncertainty; and finally computing concrete decisions such as order quantities, production plans, or allocation choices. Because this logic is written in a tailored language, the cycle of change is short: a supply chain scientist can refine it daily or weekly as edge cases and new requirements appear.
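To make the idea of explicit decision logic concrete, here is a deliberately small Python sketch. It is not Envision and not Lokad's actual method. It chains the steps named above: a cleaning rule, constraints, a crude model of uncertainty, and a final order quantity. Everything in it is an assumption for illustration:

- non-positive sales lines are treated as returns or noise and dropped;
- past period demands stand in for demand scenarios;
- the `moq`, `lot_size`, `unit_margin`, and `unit_holding_cost` parameters are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Item:
    sku: str
    on_hand: int
    on_order: int
    moq: int                  # hypothetical minimum order quantity
    lot_size: int             # hypothetical lot multiple
    unit_margin: float        # gain per unit sold
    unit_holding_cost: float  # penalty per unit left unsold at the end of the horizon


def clean_sales(raw_quantities: List[int]) -> List[int]:
    """Cleaning rule (assumed convention): non-positive lines are returns or noise."""
    return [q for q in raw_quantities if q > 0]


def expected_value(stock: int, item: Item, scenarios: List[int]) -> float:
    """Average economic outcome of holding `stock` units across demand scenarios."""
    total = 0.0
    for demand in scenarios:
        sold = min(stock, demand)
        leftover = stock - sold
        total += item.unit_margin * sold - item.unit_holding_cost * leftover
    return total / len(scenarios)


def order_quantity(item: Item, scenarios: List[int]) -> int:
    """Pick the feasible quantity (0, or >= MOQ and a lot multiple) with the best expected value."""
    if not scenarios:
        return 0
    position = item.on_hand + item.on_order
    upper = max(0, max(scenarios) - position) + item.lot_size
    candidates = [0] + [q for q in range(item.moq, upper + 1) if q % item.lot_size == 0]
    best_q, best_value = 0, -math.inf
    for q in candidates:
        value = expected_value(position + q, item, scenarios)
        if value > best_value:  # strict comparison: ties keep the smaller quantity
            best_q, best_value = q, value
    return best_q


item = Item("A-100", on_hand=2, on_order=0, moq=10, lot_size=5,
            unit_margin=4.0, unit_holding_cost=1.0)
scenarios = clean_sales([10, -1, 12, 15, 0, 20])  # -> [10, 12, 15, 20]
print(order_quantity(item, scenarios))  # 15
```

The design choice worth noting is that every rule is a named, readable function. If planners later decide that returns should be netted against sales instead of dropped, the change is a small edit to `clean_sales`, not a reconfiguration project.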

Finally, put these decisions into a dual run: compare what the scripts propose with what planners actually do, measure the financial impact, and adjust accordingly. When confidence is high enough, let the scripts take control for well‑selected parts of the portfolio.
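For illustration, the dual-run comparison can be reduced to two numbers:

- how often the script agrees with the planner;
- the difference in economic outcome once actual demand is known.

The Python sketch below assumes hypothetical records holding the units each side would have made available and the demand observed afterwards. It uses a simplified margin and holding-cost score as a stand-in for a real financial assessment.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class DualRunRecord:
    sku: str
    script_units: int   # units the script would have made available
    planner_units: int  # units the planner actually made available
    actual_demand: int  # demand observed after the fact


def outcome(units: int, demand: int, margin: float, holding_cost: float) -> float:
    """Simplified economic score: margin on units sold minus cost of unsold units."""
    sold = min(units, demand)
    return margin * sold - holding_cost * (units - sold)


def dual_run_report(records: List[DualRunRecord], margin: float,
                    holding_cost: float, tolerance: int = 0) -> Dict[str, float]:
    """Agreement rate between script and planner, plus the total value difference."""
    if not records:
        raise ValueError("no dual-run records")
    agreements = sum(1 for r in records
                     if abs(r.script_units - r.planner_units) <= tolerance)
    delta = sum(outcome(r.script_units, r.actual_demand, margin, holding_cost)
                - outcome(r.planner_units, r.actual_demand, margin, holding_cost)
                for r in records)
    return {
        "agreement_rate": agreements / len(records),
        "value_delta": delta,  # > 0 means the script would have done better
    }


records = [
    DualRunRecord("A-100", script_units=10, planner_units=10, actual_demand=8),
    DualRunRecord("A-200", script_units=5, planner_units=8, actual_demand=6),
]
print(dual_run_report(records, margin=4.0, holding_cost=1.0))
# {'agreement_rate': 0.5, 'value_delta': -2.0}
```

In this made-up example, the planner's larger quantity would have been worth more. That is exactly the kind of signal that should send the logic back for refinement before the scripts are given control.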

The crucial point is that this sequence is iterative. At no point do we expect the first version of the logic to be perfect. Nor do we expect the environment to stand still. Instead, we assume that the decision rules will have to be rewritten substantially every year or two as the assortment, the network, the service promises, and the competitive landscape evolve.

In such a setting, the main determinant of “speed” is not the date of the first go‑live. It is the average time to change: how long it takes to adjust the system when the business decides to change how it operates.

If a new pricing policy takes six months to encode in a monolithic planning suite, it hardly matters that the original implementation finished on time three years ago.

Why programmability becomes faster over the full life of the system

Seen from the outside, programmability looks like a burden. Surely it must be slower to write code than to configure an existing screen?

In simple cases, it can be. If the supply chain is relatively small, stable, and close to the vendor’s reference model, then a preconfigured solution might indeed reach an acceptable steady state quickly. Many companies do not push their planning tools very hard; they use them as structured spreadsheets with nicer UIs and some alerts. In that context, the packaged approach can be adequate and, in a narrow sense, faster.

The picture changes dramatically as soon as we move to environments that are:

- large and heterogeneous (multiple ERPs, different business units, many kinds of products and channels),

- volatile (assortments, lead times, and service promises changing frequently),

- and ambitious (aiming for high degrees of automation, not just decision support).

In those cases, preconfigured solutions face three structural challenges.

First, the semantic gap is too wide. The more exceptions and local conventions there are, the more the canonical model needs to be bent to accommodate them. Each bend becomes a configuration trick, a custom extension, or a side process. Over time, the system accumulates layers of special cases that are difficult to understand and even harder to clean up.

Second, the cost of change is externally controlled. Changing the logic of a major planning suite usually involves multiple layers: internal IT, external consultants, sometimes the vendor itself. There are release cycles, testing protocols, and governance processes designed for safety, not agility. This is understandable, but it means that even sensible, moderate changes can take months.

Third, the logic itself ages quickly. Supply chain rules that made sense three years ago are rarely optimal today. When the cost of revisiting them is high, organizations tend to postpone the effort. They patch around the edges with spreadsheets, overrides, and firefighting.

In contrast, a programmable approach pays most of its cost upfront: you must accept that you are essentially writing software. But once you have a dedicated environment for this software—one that is specialized enough to be accessible to supply chain experts, yet expressive enough to describe the reality of the business—the economics of change flip.

Adding a new constraint, integrating a new data source, or revising the way you handle promotions becomes a matter of editing scripts rather than negotiating a new implementation phase. Because the decision logic is explicit, you can version it, test it, and roll it back. Because it is centralized rather than scattered across configuration tables and side files, you can reason about it as a whole.
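The claim that explicit logic can be versioned, tested, and rolled back can be illustrated with a toy registry. In practice, scripts would usually live in a version-control system rather than in memory. The `PolicyRegistry` class, the policies, and the two checks below are hypothetical. They only show two things: a new version being refused because it violates a constraint, and a published version being rolled back.

```python
import math
from typing import Callable, List, Tuple

Policy = Callable[[int], int]  # hypothetical: maps forecast demand to an order quantity


def never_negative(policy: Policy) -> bool:
    return all(policy(d) >= 0 for d in range(0, 200))


def respects_lot_of_5(policy: Policy) -> bool:
    return all(policy(d) % 5 == 0 for d in range(0, 200))


class PolicyRegistry:
    """Keeps every published version; only versions passing all checks go live."""

    def __init__(self, checks: List[Callable[[Policy], bool]]):
        self._checks = checks
        self._versions: List[Tuple[str, Policy]] = []
        self._active = -1

    def publish(self, name: str, policy: Policy) -> None:
        failed = [c.__name__ for c in self._checks if not c(policy)]
        if failed:
            raise ValueError(f"{name} rejected, failed checks: {failed}")
        self._versions.append((name, policy))
        self._active = len(self._versions) - 1

    def rollback(self) -> str:
        if self._active <= 0:
            raise RuntimeError("no earlier version to roll back to")
        self._active -= 1
        return self.active_name

    @property
    def active_name(self) -> str:
        return self._versions[self._active][0]

    def decide(self, forecast: int) -> int:
        return self._versions[self._active][1](forecast)


def v1(forecast: int) -> int:
    return 5 * math.ceil(forecast / 5)        # round up to the lot size


def v2(forecast: int) -> int:
    return 5 * math.ceil(1.2 * forecast / 5)  # add a 20% buffer, then round up


registry = PolicyRegistry([never_negative, respects_lot_of_5])
registry.publish("v1", v1)
registry.publish("v2", v2)
print(registry.active_name, registry.decide(14))  # v2 20
try:
    registry.publish("v3-draft", lambda forecast: forecast)  # ignores the lot size
except ValueError as err:
    print(err)  # v3-draft rejected, failed checks: ['respects_lot_of_5']
print(registry.rollback(), registry.decide(14))  # v1 15
```

Rejecting `v3-draft` before it reaches production, and returning to `v1` in a single call, is the kind of controlled change described above. A real setup would also replay historical data against each version before publishing it.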

Over the lifespan of the system, this ability to change quickly tends to dominate the one‑time benefit of a fast initial go‑live.

This is not a rejection of mainstream software

It would be a mistake to read this as an argument against all packaged supply chain software. These systems solve many problems well. They provide robust transaction processing; they integrate with a wide ecosystem; they offer user interfaces, security models, auditability, and compliance features that would be costly to reproduce from scratch.

Nor is this a call for each company to build everything in‑house using general‑purpose programming languages. That path quickly leads to its own kind of fragility, with bespoke scripts proliferating without discipline.

What I am advocating is different: a programmatic core for decision logic, surrounded by the best of packaged systems for everything else.

In other words, use your ERP, WMS, and TMS for what they are good at: recording what actually happened, enforcing simple business rules, and orchestrating workflows. Use specialized planning suites where their standard features genuinely fit your needs. But do not expect those packaged tools to be the place where your most important, most dynamic, most differentiating decision logic lives.

For that, you need something that accepts from the outset that your supply chain is unique, that it will change, and that every serious initiative will eventually run into semantic details that no template anticipated.

A domain‑specific language like Envision is one possible answer. It is the one we have built and refined at Lokad over many years, precisely to give supply chain experts the ability to express and maintain their own logic directly, without going through layers of intermediaries.

Other vendors have pursued similar ideas in different forms; what matters is not the label “DSL,” but the underlying principle: decision logic must be first‑class, programmable, and owned by the people who understand the economics of the business.

Rethinking speed

If we redefine speed as how quickly we can put robust, automated decisions into production, and how quickly we can revise them whenever needed, the conclusion is unavoidable.

In the short term, preconfigured suites often look faster because they minimize visible work at the beginning and optimize for a ceremonial go‑live. In the medium and long term, their rigidity and the cost of change make them slower precisely where speed is most needed: when the business environment shifts.

A programmatic approach accepts more work upfront, but it pays dividends every time reality departs from the initial assumptions—which is to say, all the time. In a world where uncertainty is the norm rather than the exception, that kind of speed matters more than the number of weeks on an implementation slide.

The question, then, is not whether you want to write software for your supply chain. If your business is large and complex enough, you are already writing it: through configuration, through spreadsheets, through local scripts and manual conventions. The only real question is whether you want that software to be explicit, coherent, and under your control.

My answer is clear: if we care about speed in any meaningful sense, the supply chain must be programmable.