Better promotion forecasts in retail

Since our major Tags+Events upgrade last fall, we have been very actively working on promotion forecasting for retail. We now have thousands of promotional events in our databases, and the analysis of those events has led us to very interesting findings.

Although it’s hardly surprising, we have found that:

- promotion forecasts, when performed manually by practitioners, usually involve forecast errors above 60% on average. Your mileage may vary, but typical sales forecast errors in retail are usually closer to 20%.

- including promotion data through tags and events reduces the average forecast error by roughly 50%. Again, your mileage may vary depending on the amount of data that you have on your promotional events.

As a less intuitive result, we have also found that rule-based methods and linear methods, although widely advertised by some experts and some software tools, are very weak against overfitting, and can distort the evaluation of the forecast error, leading to a false impression of performance in promotion forecasting.

Also, note that this 50% improvement has been achieved with quite a limited amount of information, usually no more than 2 or 3 binary descriptors per promotion.

Even crude data about your promotions leads to significant forecast improvements, which turn into significant working capital savings.

The first step to improve your promotion forecasts consists of gathering accurate promotion data. In our experience, this step is the most difficult and the most costly one. If you do not have accurate records of your promotions, then there is little hope of getting accurate forecasts. As the saying goes, Garbage In, Garbage Out.

Yet, we did notice that even a single promotion descriptor, a binary variable that just indicates whether the article is currently promoted or not, can lead to a significant forecast improvement. Thus, although your records need to be accurate, they don’t need to be detailed to improve your forecasts.

Thus, we advise you to keep precise track of the timing of your promotions: when did each one start? When did it end? Note that for eCommerce, a front page display often has an effect comparable to a product promotion, so you also need to keep track of the evolution of your front page.
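As a minimal sketch of the single binary descriptor discussed above, assume that promotions are recorded as an article, a start date and an end date. The names `Promotion` and `is_promoted`, as well as the article identifier and the dates, are hypothetical and only serve the illustration; this is not Lokad's API.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Promotion:
    """One promotional event; the field names are illustrative assumptions."""
    article: str
    start: date  # first day of the promotion (inclusive)
    end: date    # last day of the promotion (inclusive)


def is_promoted(article: str, day: date, promotions: list[Promotion]) -> bool:
    """Return True if `article` is covered by at least one promotion on `day`."""
    return any(
        p.article == article and p.start <= day <= p.end
        for p in promotions
    )


# Made-up record: a two-week promotion on a single article.
promotions = [
    Promotion("lemon-lollipop-medium", date(2010, 3, 1), date(2010, 3, 14)),
]

flag_during = is_promoted("lemon-lollipop-medium", date(2010, 3, 7), promotions)
flag_after = is_promoted("lemon-lollipop-medium", date(2010, 3, 15), promotions)
```

The same structure could hold front page displays for eCommerce, since they only need a start and an end as well.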

Then, article description matters. Indeed, in our experience, even the most frequently promoted articles are not going to have more than a dozen promotions in their market lifetime. On average, the number of known past promotions for a given article is ridiculously low, ranging from zero to one. As a result, you can’t expect reliable results by focusing on the past promotions of a single product at a time because, most of the time, there aren’t any.

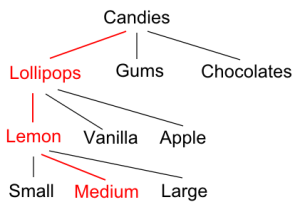

So instead, you have to focus on articles that resemble the article that you are planning to promote. With Lokad, you can do that by associating tags with your sales. Typically, retailers use a hierarchy to organize their catalog. Think of an article hierarchy with families, sub-families, articles, variants, etc.

Translating a hierarchical catalog into tags can be done quite simply following the process illustrated below for a fictitious candy reseller:

The tags associated with the sales history of medium lemon lollipops would be LOLLIPOPS, LEMON and MEDIUM.

This process will typically create 2 to 6 tags per article in your catalog, depending on the complexity of your catalog.
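The translation from a catalog hierarchy to tags can be sketched as follows. This is only an illustration under the assumption that each article is stored with its path through the hierarchy; the function name `tags_from_hierarchy` and the catalog layout are hypothetical.

```python
def tags_from_hierarchy(path: list[str]) -> list[str]:
    """Turn a catalog path (family, sub-family, variant, ...) into upper-case tags."""
    tags: list[str] = []
    for level in path:
        # Normalize each hierarchy level into a single upper-case token.
        tag = level.strip().upper().replace(" ", "_")
        if tag and tag not in tags:  # skip empty levels and duplicates
            tags.append(tag)
    return tags


# Hypothetical catalog for the fictitious candy reseller.
catalog = {
    "medium lemon lollipop": ["Lollipops", "Lemon", "Medium"],
    "large lemon lollipop": ["Lollipops", "Lemon", "Large"],
}

tags_by_article = {
    article: tags_from_hierarchy(path) for article, path in catalog.items()
}
```

Two articles that share a family or a flavor end up sharing the corresponding tags, which is what lets the promotions of one article inform the forecast of another.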

We have said that even very limited information about your promotions could be used to improve your sales forecasts right away. Yet, more detailed promotion information clearly improves the forecast accuracy.

We have found that two items are very valuable for improving the forecast accuracy:

- the mechanism that describes the nature of the discount offered to your customers. The typical mechanism is a flat discount (e.g. -20%), but there are many other mechanisms such as free shipping or discounts for larger quantities (e.g. buy one and get one for free).

- the communication that describes how your customers get notified of the promotional event. Typically, communication includes marketing operations such as radio, newspaper or local ads, but also the custom packaging (if any) and the visibility of promoted articles within the point of sale.

In the case of larger distribution networks, the overall availability of the promotion should also be described if articles aren’t promoted everywhere. Such a situation typically arises if point of sale managers can opt out of promotional operations.

In discussions with professionals, we have found that many retailers expect Lokad to produce a set of rules, and they expect those rules to explain promotions, such as:

IF TV_ADS AND PERCENT25_DISCOUNT

THEN PROMO_SALES = 5 * REGULAR_SALES;

Basically, those expected rules always follow more or less the same pattern:

- A set of binary conditions that defines the scope of the rule.

- A set of linear coefficients to estimate the effect of the rule.
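To make this pattern concrete, here is a minimal sketch of such a rule: a set of binary conditions plus one linear coefficient. The names `Rule` and `promo_sales` are hypothetical, and the sketch only illustrates the kind of rule that retailers expect, not a method that Lokad uses or recommends.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """A rule in the expected pattern; names are illustrative assumptions."""
    conditions: frozenset[str]  # binary descriptors that must all be present
    multiplier: float           # linear coefficient applied to regular sales

    def applies(self, descriptors: set[str]) -> bool:
        # The rule is in scope when every condition is among the descriptors.
        return self.conditions <= descriptors


def promo_sales(regular_sales: float, descriptors: set[str], rules: list[Rule]) -> float:
    """Apply the first matching rule; fall back to regular sales otherwise."""
    for rule in rules:
        if rule.applies(descriptors):
            return rule.multiplier * regular_sales
    return regular_sales


# The example rule quoted in the text.
tv_rule = Rule(frozenset({"TV_ADS", "PERCENT25_DISCOUNT"}), 5.0)

with_tv = promo_sales(100.0, {"TV_ADS", "PERCENT25_DISCOUNT"}, [tv_rule])
without_discount = promo_sales(100.0, {"TV_ADS"}, [tv_rule])
```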

We have found that many tools on the software market are available to help you discover those rules in your data, which seemingly has led many people to believe that this approach is the only one available.

Yet, according to our experiments, rule-based methods are far from optimal. Worse, those rules are really weak against overfitting. This weakness frequently leads to painful situations where there is a significant gap between estimated forecast accuracy and real forecast accuracy.

Overfitting is a very subtle, and yet very important, phenomenon in statistical forecasting. Basically, the central issue in forecasting is that you want to build a model that is very accurate against the data you don’t have.

In particular, statistical theory indicates that it is possible to build models that happen to be very accurate when applied to the historical data, and yet very inaccurate when predicting the future. The problem is that, in practice, if you do not carefully think about the overfitting problem beforehand, building such a model is not a mere possibility, but the most probable outcome of your process.

Thus, you really need to optimize your model against the data you don’t have. Yet, this problem looks like a complete paradox because, by definition, you can’t measure anything if you don’t have the corresponding data. And we have found that many professionals have given up on this issue, because it doesn’t look like a tractable problem anyway.

Our advice is: DON’T GIVE UP

The core issue with those rules is that they perform too well on historical data. Each rule you add mechanically reduces the forecast error that you are measuring on your historical data. If you add enough rules, you end up with an apparent near-zero forecasting error. Yet, the empirical error that you measure on your historical data is an artifact of the process used to build the rules in the first place. Zero forecast error on historical data does not translate into zero forecast error on future promotions. Quite the opposite in fact, as such models tend to perform very poorly on future promotions.
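The effect described above can be reproduced on synthetic data. The sketch below is an illustration under strong assumptions, not an analysis of real promotions: the data is simulated, only the first binary descriptor truly influences the uplift, and a "rule" is simply the average uplift observed for one combination of descriptors. All function names are hypothetical.

```python
import random
from collections import defaultdict
from statistics import mean


def make_promotions(n, n_descriptors, rng):
    """Simulate promotions: only descriptor 0 truly affects the uplift."""
    rows = []
    for _ in range(n):
        flags = tuple(rng.randint(0, 1) for _ in range(n_descriptors))
        uplift = 3.0 + 2.0 * flags[0] + rng.gauss(0.0, 1.0)
        rows.append((flags, uplift))
    return rows


def fit_rules(train, n_used):
    """Build one rule per combination of the first `n_used` descriptors."""
    groups = defaultdict(list)
    for flags, uplift in train:
        groups[flags[:n_used]].append(uplift)
    rules = {key: mean(values) for key, values in groups.items()}
    fallback = mean(uplift for _, uplift in train)  # used when no rule matches
    return rules, fallback


def mean_abs_error(rows, rules, fallback, n_used):
    """Mean absolute error of the rule set on the given promotions."""
    return mean(
        abs(uplift - rules.get(flags[:n_used], fallback))
        for flags, uplift in rows
    )


rng = random.Random(0)
train = make_promotions(200, 12, rng)   # "historical" promotions
test = make_promotions(1000, 12, rng)   # "future" promotions

errors = {}
for n_used in (1, 4, 12):
    rules, fallback = fit_rules(train, n_used)
    errors[n_used] = (
        mean_abs_error(train, rules, fallback, n_used),  # historical error
        mean_abs_error(test, rules, fallback, n_used),   # future error
    )
    print(n_used, len(rules), errors[n_used])
```

With 12 descriptors, nearly every historical promotion gets its own rule, so the error measured on historical data collapses toward zero while the error on the simulated future promotions does not follow.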

Although optimizing for the data you don’t have is hard, statistical learning theory offers both theoretical understanding and practical solutions to this problem. The central idea consists of introducing the notion of structural risk minimization, which balances the empirical error against the complexity of the model.

This will be discussed in a later post, stay tuned.

(Shameless plug) Many of those modern solutions, i.e. mathematical models that happen to be careful about the overfitting issue, have been implemented by Lokad, so that you don’t have to hire a team of experts to benefit from them.
