An opinionated review of Deep Inventory Management

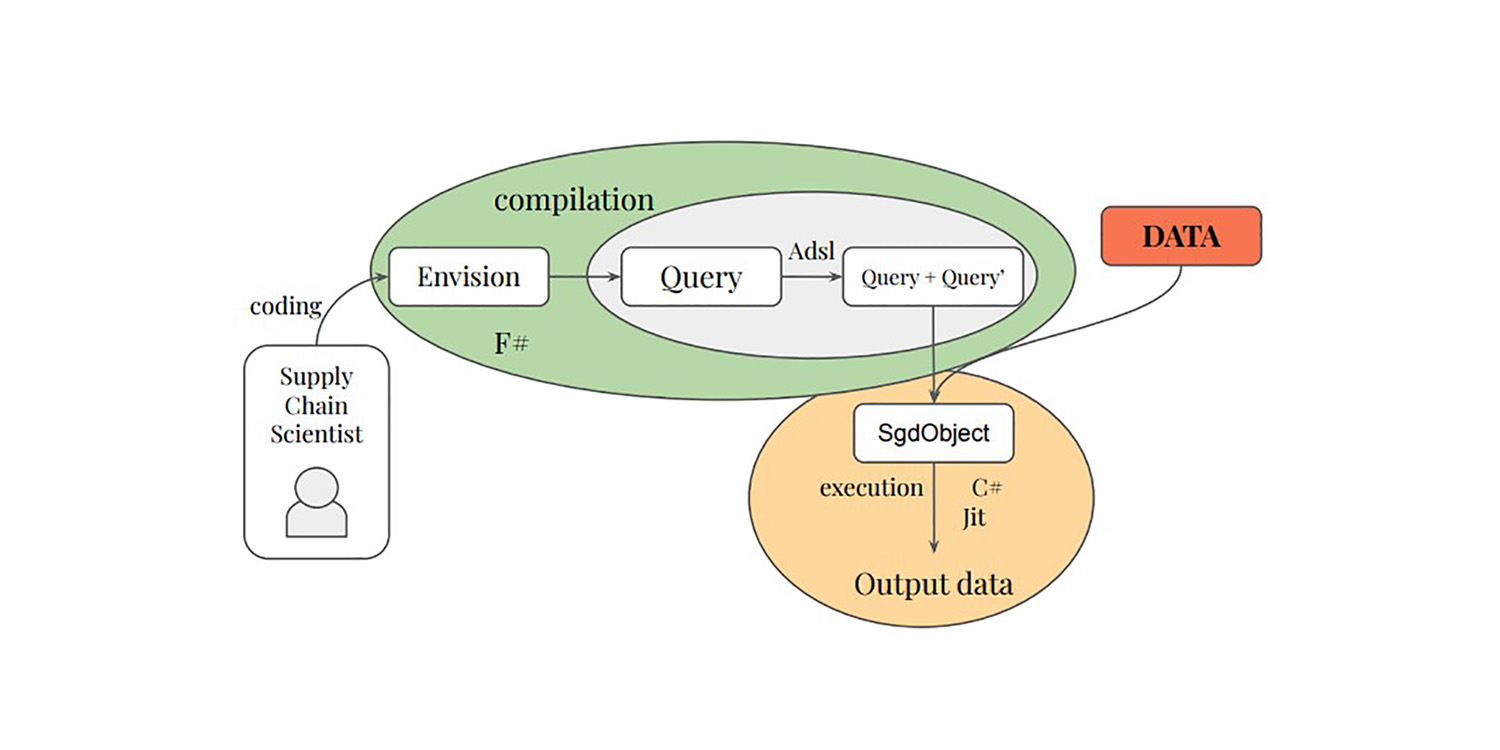

In late 2022, a team at Amazon published supply chain-related research titled Deep Inventory Management1. This paper presents an inventory optimization technique (referred to as DIM in the following) that features both reinforcement learning and deep learning. The paper claims that the technique has been used successfully over 10,000 SKUs in real-world settings. This paper is interesting on multiple fronts, and is somewhat similar to what Lokad has been doing since 2018. In the following, I discuss what I see as the merits and demerits of the DIM technique from the specific vantage viewpoint of Lokad, as we explored similar venues in the last few years.

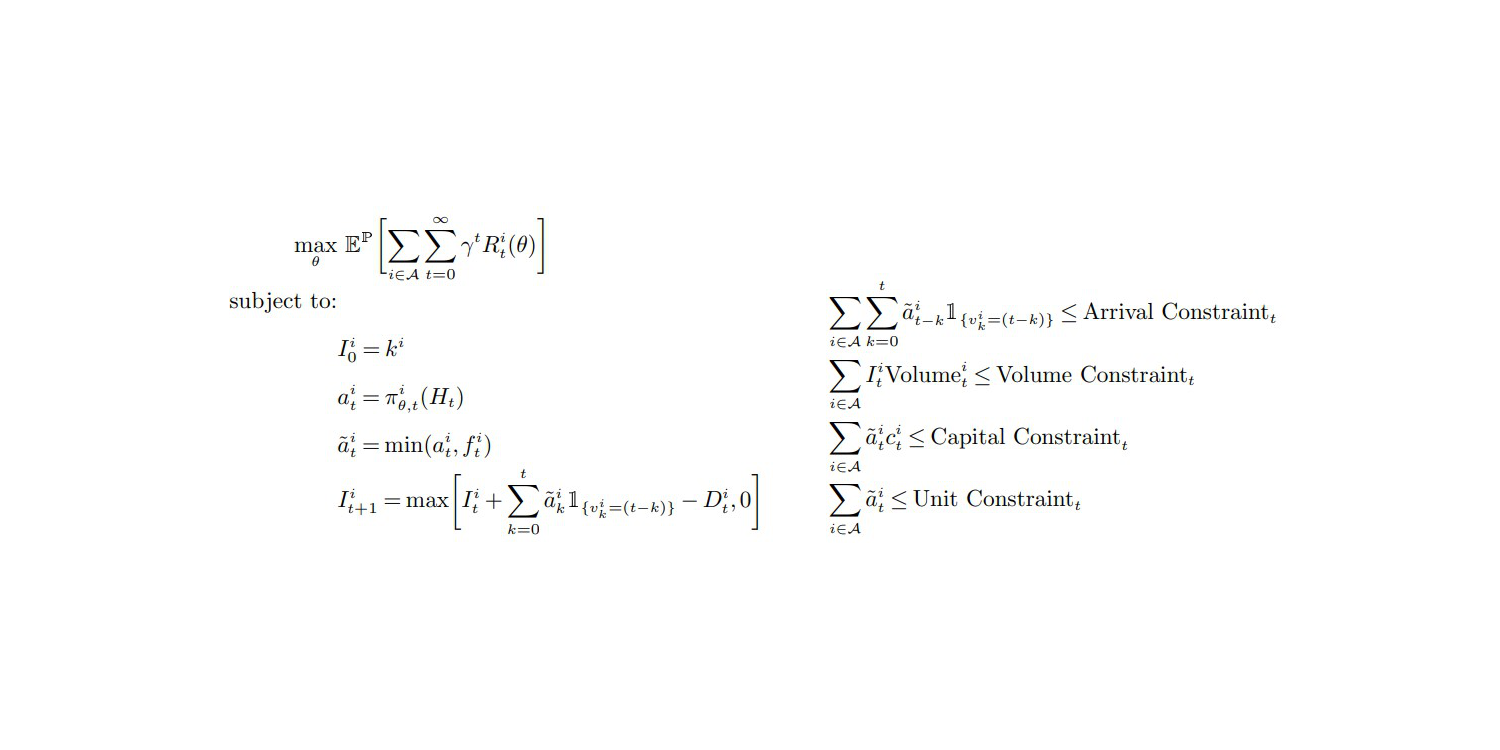

The Objective Function and Constraints (p.27, Appendix A), from "Deep Inventory Management", Nov. 2022

My first observation is that this paper rings true, and I am thus inclined to support its findings. The overall setup resonates quite strongly with my own experiments and observations. Indeed, most papers published about supply chain are just bogus - for one reason or another. Supply chains face quite a severe case of epistemic corruption,2 and deep skepticism should be the default position when confronted with any supposedly “better” way of approaching a supply chain problem.

The most notable contribution of the DIM technique is to entirely bypass the forecasting stage and go straight for inventory optimization. The classical way to approach inventory optimization consists of decomposing the problem into two stages. First, forecast the demand; second, optimize the inventory decision. Lokad still follows this staged process (for good reasons, see the action reward 3). However, DIM merges the two through an approach referred to as differentiable simulators.

Merging the “learning” and “optimization” stages is a promising path, not just for supply chain, but for computer science as a whole. For the last two decades, there has been a gradual convergence between learning and optimization from an algorithmic perspective. As a matter of fact, the primary learning technique used by Lokad has an optimization algorithm at its core. Conversely, a recent breakthrough (unpublished) of Lokad’s on stochastic optimization centres around a learning algorithm.

I envision a future where stand-alone forecasting is treated like an obsolete practice that has been entirely superseded by new techniques; ones that entirely fuse the perspectives of “learning” and “optimization”. Lokad has already been walking along this path for some time. In fact, ever since we moved to probabilistic forecasts back in 2015, exporting the raw forecasts out of Lokad has been considered impractical, hence mostly collapsing the process to a single-stage one from the client perspective. However, the two-stage process still exists within Lokad because there are some deep, still unsolved, problems for the unification to happen.

Now, let’s discuss my views of the shortcomings of the DIM technique.

My first criticism is that the use of deep learning by DIM is underwhelming.

From the Featurization (Appendix B) section, it’s clear that what the “deep” model is learning first and foremost is to predict future lead demand - that is, the varying demand integrated over the varying lead time.

The (implicitly probabilistic) estimation of lead demand is not a “tough” problem that requires deep learning, at least not in the settings presented by this Amazon team. In fact, I wager that the whole empirical improvement is the consequence of a better lead demand assessment. Furthermore, I would also wager that a comparable-if-not-better assessment of the lead demand can be obtained with a basic parametric probabilistic model, as was done in the M5 competition4. This would entirely remove deep learning from the picture, keeping only the “shallow” differentiable programming portion of the solution.

If we set aside the estimation of lead demand, DIM has little to offer. Indeed, in the supply chain settings as presented in the paper, all SKUs are processed in quasi-isolation with overly mild company-wide constraints - namely caps on total volume, total capital, and total units. Addressing those upper bounds can be done quite easily, sorting the units to be reordered5 by their decreasing dollar-on-dollar returns - or possibly their dollar-on-unit returns - if the capacity of the chaotic storage used by Amazon is the true bottleneck.

As a far as constraints go, company-wide caps are trivial constraints that don’t require sophisticated techniques to be addressed. Deep learning would really shine if the authors were able to address thorny constraints which abound in supply chains. For example, MOQs (minimum order quantities) defined at the supplier level, full truck loads, supplier price breaks, perishable products, etc., are concerns that can’t be addressed through naïve technique like the prioritization that I mentioned above. For such thorny constraints, deep learning would really excel as a versatile stochastic optimizer – granted that someone succeeds in doing so. However, DIM entirely sidesteps such concerns and it’s wholly unclear if DIM could be extended to cope with such issues. My take is that it can’t.

To the credit of the authors, cross-product constraints are mentioned in the very last line of their conclusion as an exciting avenue of research. While I do agree with the sentiment, it’s an under-statement. Not supporting those ubiquitous supply chain constraints is an immediate showstopper. Supply chain practitioners would revert to their spreadsheets in less than a month. Approximately correct is better than exactly wrong.

Moreover, we have a whole can of worms with the real-valued actions, i.e. fractional order quantities, as produced by DIM – see equation (1) and Assumption 1 (page 12). Indeed, in supply chain, you can’t reorder 0.123 units, it’s either 0 or 1. Yet, the authors sidestep the whole issue. The DIM technique outputs fractional quantities and requires the reward function to be “well behaved”. In practice, it’s clear that this approach is not going to work well if the reward function isn’t strictly monotonous with regards to the ordered quantity.

Thus, we are left with a less-than-desirable feature (fractional orders) and a less than-desirable requirement (monotony of the reward function), the combination of the two being the cornerstone of the proposed differentiable simulator. Yet, supply chain is ruled by the law of small numbers6. Modern inventory problems are dominated by their discrete characteristics. At the very least, this aspect should have been singled out by the authors as a severe limitation of DIM - something to be pursued in subsequent research.

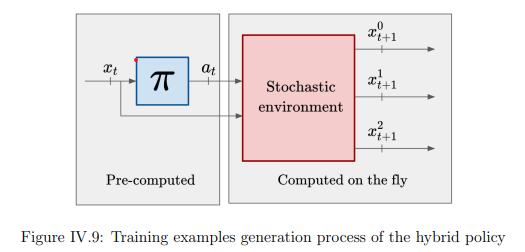

Blending gradients and discrete policies is a fundamental problem for stochastic optimization, not just the proposed differentiable simulators. Indeed, the stochastic gradient descent (SGD) operates on real-valued parameters, and, as such, it is not obvious how to optimize policies that govern fundamentally discrete decisions.

Operating over fundamentally discrete spaces through gradient-driven processes can certainly be done, as brilliantly demonstrated by LLMs (large language models), but it takes a whole bag of tricks. Until the equivalent tricks are uncovered for the class of situations faced by supply chains, differentiable simulators are a promising idea, not a production-grade option.

My second criticism is that there are tons of edge cases that are not even mentioned by the DIM authors.

In particular, the authors remain eminently vague on how they have selected (…cherry-picked) their 10,000 SKUs. Indeed, while I was carrying experiments at Lokad back in 2018 and 2019, I have been using eerily similar featurization strategies (Appendix B) for deep learning models used by Lokad.

From those experiments, I propose that:

- New and recent products won’t work well, as the rescaling hinted by the equations (13), (30) and (31) will behave erratically when historical data is too thin.

- Slow movers will stumble upon improper remediations of their past stock-outs, as the technique assumes that “reasonable” corrected demand exists (not the case for slow movers).

- Intermittent products (unpublished or unavailable for long periods, like 2+ months) will also stumble over the supposedly corrected demand.

- Rivals’ SKUs, where customers aggressively pick the lowest price, will get under-appreciated, as the model cannot reflect the drastic impact of when SKUs overtake (price-wise) a rival.

Those edge cases are, in fact, the bulk of the supply chain challenge. In a paper, it is tempting to cherry pick SKUs that are nicely behaved: not too recent, not too slow, not too erratic, not intermittent, etc. Yet, if we have to resort to sophisticated techniques, it’s a tad moot to focus on the easy SKUs. While economic improvements can be achieved on those SKUs, the absolute gain is minor (modest at best) – precisely because those SKUs are nicely behaved anyway. The bulk of the supply chain inefficiencies lies in the extremes, not the middle.

Frontally addressing those ill-behaved SKUs is exactly where deep learning would be expected to come to the rescue. Alas, DIM does the opposite and tackles the nicely behaved SKUs that can be approached with vastly less sophisticated techniques with little or no downside.

My third criticism is that DIM has a somewhat convoluted technical setup.

This is probably one of the most under-appreciated issues in the data science community. Complexity is the enemy of reliability and efficiency. While deep learning is fantastic, few companies can afford the engineers it takes to operate a setup like DIM. This is not like ChatGPT where all the engineering shenanigans are mutualized across the entire customer base of the software vendor. Here, considering the amount of specifics that goes into DIM, every client company has to bear the full operating costs associated with their own instance of the solution.

On the hardware side, we have an EC2 p3.16xlarge7 virtual machine, presently priced at 17k USD / month on AWS. For 10,000 SKUs, that’s… steep.

Lokad has many clients that individually operate millions of SKUs, and most of them have less than 1 billion USD in turnover. While it might be possible to downsize a bit this VM, and to shut it down when unused, at Lokad, we have learned that those options rarely qualify for production.

For example, cloud computing platforms do face shortages of their own: sometimes, the VM that was supposed to be available on-demand takes hours to come online. Also, never assume that those models can just be “pretrained”, there will be a day - like next Tuesday - where the whole thing has to be retrained from scratch for some imperative reasons8. Moreover, a production-grade setup not only requires redundancy but also extra environments (testing, pre-production, etc.).

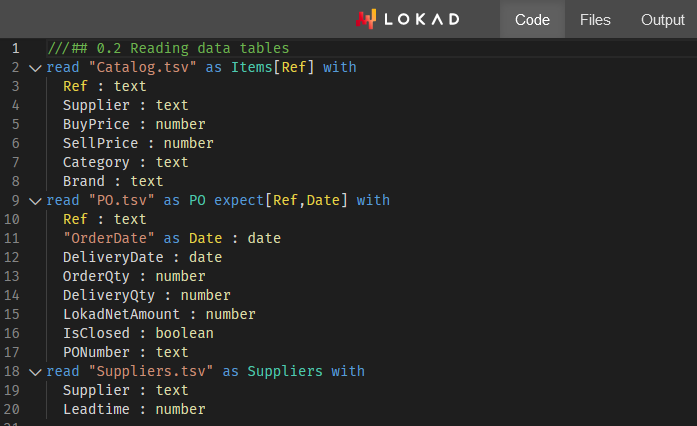

On the software side, the need for something like the Plasma Object Store is the archetype of those accidental complications that come with deep learning. Let’s consider that the training dataset - with 80,000 SKUs weekly-aggregated over just 104 weeks - should weigh less that 100MB (if the data is sanely represented).

While the DIM authors remain artfully vague, referring to a “large amount of data” (page 32), it’s obvious that the featurization strategy inflates the original data footprint by 3 orders of magnitude (1000x, or thereabouts). Bear in mind, the EC2 p3.16xlarge boasts no less than 488 GB of RAM, which should be sufficient to process a 100mb dataset (approx. 100 GB after the inflation is applied)… Well, been there, done that, and faced the same problem.

For example, a realistically-sized supply chain dataset would usually exceed 1 terabyte after data inflation - as required by the DIM approach. In this case, a typical data scientist cannot reproduce a bug locally because their workstation only has 64GB of RAM. Beyond that, there is also the matter of the Python/Plasma frontier where things can go wrong.

Beyond the primary criticisms above, there are secondary concerns. For example, dynamic programming9 - mentioned in the introduction and conclusion as the baseline and DIM contender - is just a poor baseline. Dynamic programming is an ancient technique (dating from the 1950s) and does not reflect the state of the art as far as blending optimization and learning is concerned.

Granted, the supply chain literature is lacking on this front, but that means authors have to find relevant baselines outside their field(s) of study. For example, AlphaGo Zero10 is a much better intellectual baseline when it comes to a remarkable application of deep learning for optimization purposes - certainly when compared to dynamic programming techniques that are almost 80 years old.

In conclusion, contrary to what my critique might suggest, it’s a better paper than most and absolutely worthy of being critiqued. Differentiable programming is a great instrument for supply chains. Lokad has been using it for years, but we have not exhausted what can be done with this programmatic paradigm.

There is more to explore, as demonstrated by DIM. Differentiable simulators are a cool idea, and it feels less lonely when tech giants like Amazon challenge the core dogmas of the mainstream supply chain theory – just like we do. At Lokad, we have the project to, somehow, blend montecarlo and autodiff11 in ways that would nicely fit those differentiable simulators.

Stay tuned!

-

Deep Inventory Management, Dhruv Madeka, Kari Torkkola, Carson Eisenach, Anna Luo, Dean P. Foster, Sham M. Kakade, November 2022. ↩︎

-

Adversarial Market Research for Enterprise Software, Lecture by Joannes Vermorel, March 2021. ↩︎

-

Action reward, a framework for inventory optimization, Gaëtan Delétoile, March 2021. ↩︎

-

No1 at the SKU-level in the M5 forecasting competition, Lecture by Joannes Vermorel, January 2022. ↩︎

-

Retail stock allocation with probabilistic forecasts, Lecture by Joannes Vermorel, May 2022. ↩︎

-

Quantitative principles for supply chains, Lecture by Joannes Vermorel, January 2021. ↩︎

-

A powerful server rented online from Amazon with 8 high-end professional GPUs and about 15x more RAM than a typical high-end desktop workstation. ↩︎

-

“SCO is not your average software product” in Product-oriented delivery for supply chain, Lecture by Joannes Vermorel, December 2020. ↩︎

-

Dynamic programming should have been named “structured memoization”. As a low-level algorithmic technique, it is still very much relevant, but this technique doesn’t even really belong to the same realm than reinforcement learning. Structured memoization, as a technique, belongs to the realm of the basic/fundamental algorithmic tricks, like balanced trees or sparse matrices. ↩︎

-

Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm, David Silver, Thomas Hubert, Julian Schrittwieser, Ioannis Antonoglou, Matthew Lai, Arthur Guez, Marc Lanctot, Laurent Sifre, Dharshan Kumaran, Thore Graepel, Timothy Lillicrap, Karen Simonyan, Demis Hassabis, December 2017. ↩︎

-

Both montecarlo and autodiff are special programmatic blocks in Envision, supporting randomized processes and differentiable processes, respectively. Combining the two basically gives something that is really close to the building blocks that a differentiable simulator would require. ↩︎