Probabilistic Exponential Smoothing for Explainable AI in the Supply Chain domain

One of Lokad’s venues of research consists of revisiting something simple (and fundamental) and trying to make it a bit better (and a bit simpler). This approach tends to be the exact opposite of most research papers, which happen to be more complex versions of some prior work. Thus, by default, we strive for greater simplicity rather than greater sophistication. One of Lokad’s R&D engineers, Antonio Cifonelli, applied this approach in his PhD dissertation, tackling the venerable model of exponential smoothing to give it better attributes—namely, making it more explainable.

Explainability, as a quality for a model, is frequently misunderstood both in nature and in intent—at least as far as supply chains are concerned. Having an explainable model is not about getting results that can be readily understood or validated by a human mind. This would be very flawed given half a dozen floating point operations are already sufficient to confuse most people, including those who consider themselves at ease with numbers. The human mind is simply not (naturally) adept when it comes basic arithmetic; it was, after all, the first domain to be overtaken by machines during the 1950s. Also, explainability is not intended to make the model trustworthy—well, not quite. There are more reliable instruments (backtesting, cross-validation, etc.) to assess whether a model should be trusted or not. Being able to spin a narrative about the model and its results should not be considered sufficient grounds for trust.

From the Lokad’s perspective, the point of explainability is to preserve the possibility to operate the supply chain through a variant of the model when confronted with a disruption that invalidates the original model. Supply chain disruptions are varied: wars, tariffs, lockdowns, bankruptcies, storms, litigations, etc. When a disruption happens, the predictive model becomes invalid in subtle but important ways. However, this does not mean all the patterns ever captured by the model cease to be relevant. For example, the model’s seasonality profile may remain entirely unimpacted.

As such, an explainable model should provide ways to a Supply Chain Scientist to modify, tweak, or distort the original model in order to obtain a variant that will adequately reflect the disruption. Naturally, these changes are expected to be guesstimates—heuristic in nature—as precise data are not available yet. Nevertheless, as Lokad’s adopted mantra goes, it is better to be approximately correct than exactly wrong.

For further details about what explainable models might look like, check out Dr Cifonelli’s PhD manuscript below.

Author: Antonio Cifonelli

Date: December 2023

Abstract:

The key role that AI could play in improving business operations has been known for a long time (at least since 2017), but the penetration process of this new technology has encountered certain obstacles within companies, in particular, implementation costs. Companies remain attached to their old systems because of the energy and money required to replace them. On average, it takes 2.8 years from supplier selection to full deployment of a new solution. There are three fundamental points to consider when developing a new model. Misalignment of expectations, the need for understanding and explanation, and performance and reliability issues. In the case of models dealing with supply chain data, there are five additionally specific issues:

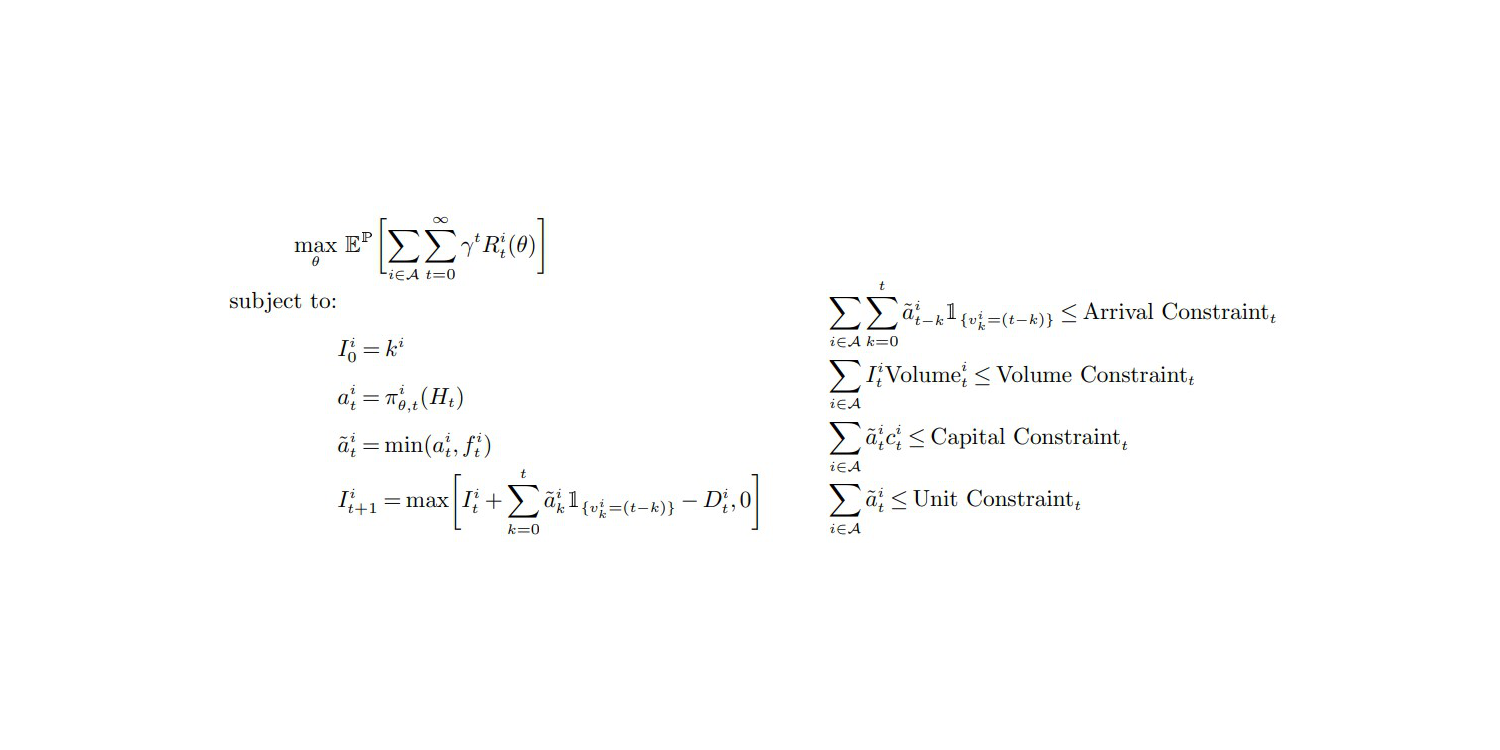

- Managing uncertainty. Precision is not everything. Decision-makers are looking for a way to minimize the risk associated with each decision they have to make in the presence of uncertainty. Obtaining an exact forecast is a advantageous; obtaining a fairly accurate forecast and calculating its limits is realistic and appropriate.

- Handling integer and positive data. Most items sold in retail cannot be sold in subunits, for example, a can of food, a spare part or a t-shirt. This simple aspect of selling, results in a constraint that must be satisfied by the result of any given method or model: the result must be a positive integer.

- Observability. Customer demand cannot be measured directly, only sales can be recorded and used as a proxy for demand.

- Scarcity and parsimony. Sales are a discontinuous quantity: a product may sell well one week, then not at all the next. By recording sales by day, an entire year is condensed into just 365 (or 366) points. What’s more, a large proportion of them will be zero.

- Just-in-time optimization. Forecasting is a key function, but it is only one element in a chain of processes supporting decision-making. Time is a precious resource that cannot be devoted entirely to a single function. The decision-making process and associated adaptations must therefore be carried out within a limited time frame, and in a sufficiently flexible manner to be able to be interrupted and restarted if necessary in order to incorporate unexpected events or necessary adjustments.

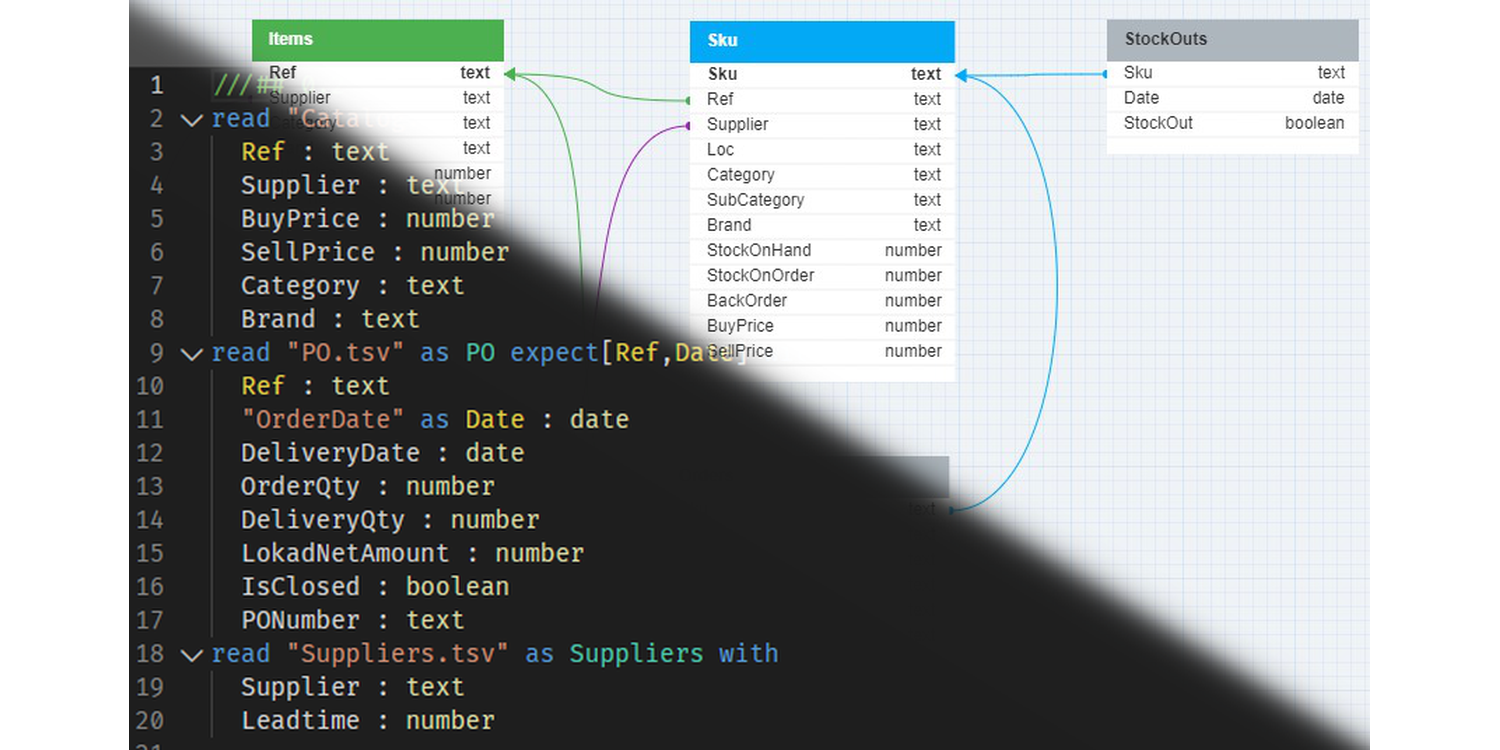

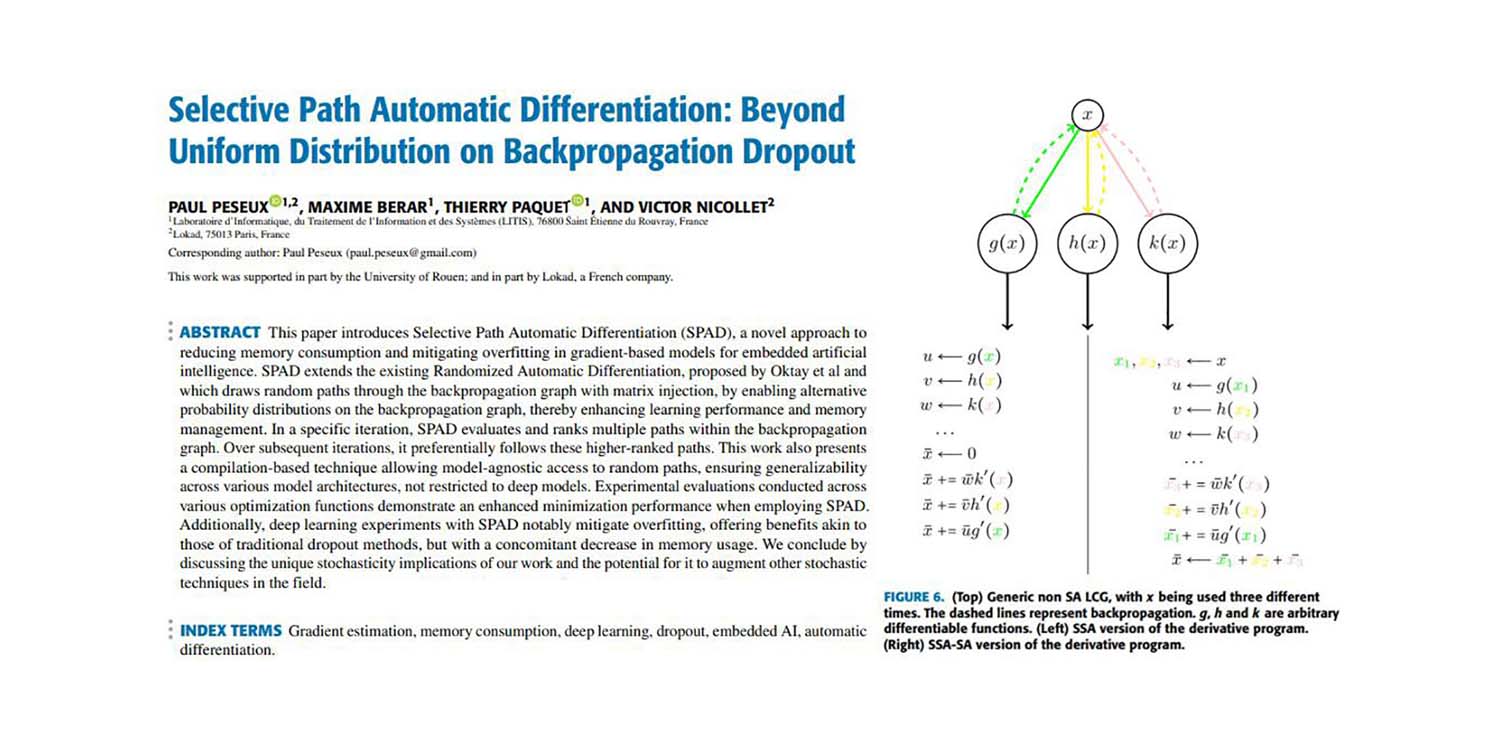

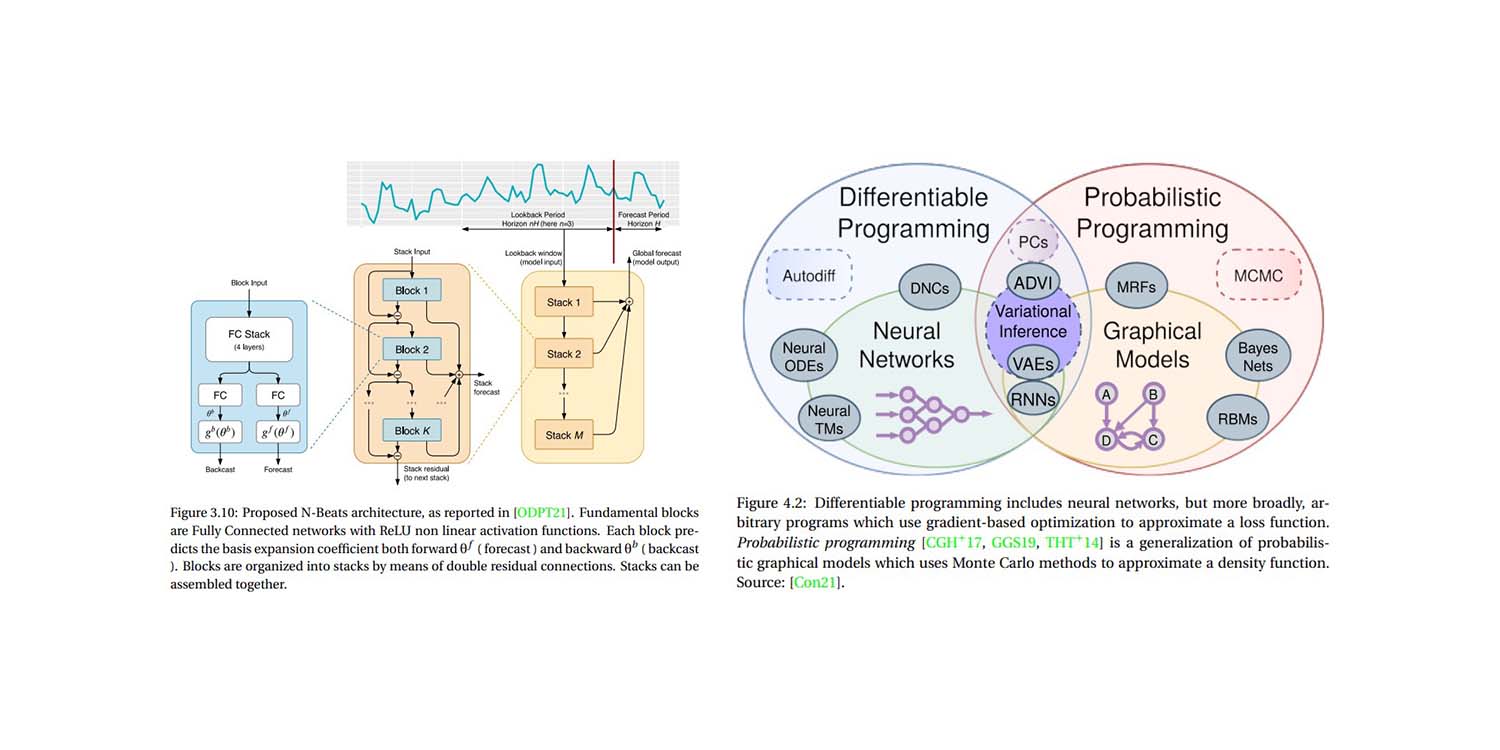

This thesis fits into this context and is the result of the work carried out at the heart of Lokad, a Paris-based software company aiming to bridge the gap between technology and the supply chain. The doctoral research was funded by Lokad in collaboration with the ANRT under a CIFRE contract. The proposed work aims to be a good compromise between new technologies and business expectations, addressing the various aspects presented above. We have started forecasting using basic methods - the exponential smoothing family - which are easy to implement and extremely fast to run. As they are widely used in the industry, they have already won the confidence of users. What’s more, they are easy to understand and explain to an unlettered audience. By exploiting more advanced AI techniques, some of the limitations of the models used can be overcome. Cross-learning proved to be a relevant approach for extrapolating useful information when the number of available data was very limited. Since the common Gaussian assumption is not suitable for discrete sales data, we proposed using a model associated with either a Poisson distribution or a Negative Binomial one, which better corresponds to the nature of the phenomena we are seeking to model and predict. We also proposed using simulation to deal with uncertainty. Using Monte Carlo simulations, a number of scenarios are generated, sampled and modelled using a distribution. From this distribution, confidence intervals of different and adapted sizes can be deduced. Using real company data, we compared our approach with state-of-the-art methods such as the Deep Auto-Regressive (DeepAR) model, the Deep State Space (DeepSSMs) model and the Neural Basis Expansion Analysis (N-Beats) model. We deduced a new model based on the Holt-Winter method. These models were implemented in Lokad’s work flow.

Jury:

The defense took place in front of a jury composed of:

- M. Massih-Reza AMINI, Professor at Université Grenoble, Alpes, Rapporteur.

- M.me Mireille BATTON-HUBERT, Professor at École des Mines de Saint-Étienne, Rapporteur.

- M.me Samia AINOUZ, Professor at INSA Rouen, Normandie , Examiner.

- M. Stéphane CANU, Professor at INSA-Rouen, Normandie, Thesis director.

- M.me Sylvie LE HÉGARAT-MASCLE, Professor at Paris-Saclay, Examiner.

- M. Joannes VERMOREL, CEO of Lokad, Invited member.